Roughly 48 million Americans have some degree of hearing loss, according to the Hearing Loss Association of America. Although broadcast TV captioning was invented with deaf and hard-of-hearing viewers in mind, today the service is widely available and benefits everyone.

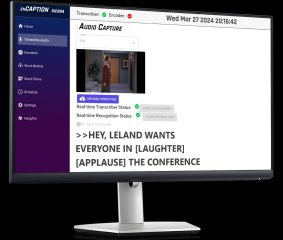

It wasn’t always this way. Before 1980, closed captions did not appear on U.S. television, and no government mandate required broadcasters to provide them accurately and reliably. When captions did first appear on TV screens, they were so onerous to produce that the industry jokingly called captioning “a black art.” Since then, the volume of live, recorded, and on-demand media requiring captions has skyrocketed, so it’s good to know that enCaption from ENCO Systems can automate the end-to-end process accurately, reliably, and in virtually real time.

During the 1970s, PBS (the U.S. public broadcasting network, with roughly 170 member stations) worked to advance a technological discovery made by the National Bureau of Standards (NBS), now known as the National Institute of Standards and Technology (NIST). NBS had invented a system that could hide timecoded caption data within an analog NTSC TV signal; TV sets equipped with special decoders could extract that data and display it on screen.

This was the industry’s first foray into converting a program’s audio into on-screen text that displays in sync with the spoken word. PBS’s work earned it an Emmy Award from the Academy of Television Arts and Sciences in 1980. Key milestones in the technology’s evolution include:

- In 1976, the FCC reserved Line 21 of the NTSC Vertical Blanking Interval (VBI) for closed captions

- Early captioning methods required hardware-based encoders and specialized editing consoles

- Tape-to-tape workflows were used solely for offline closed captioning

- VHS or ¾-inch U-matic videotapes could be sent to a third-party transcription service, which created a caption file on a caption-editing console

- By the mid-1990s, legal mandates began obligating broadcasters to provide closed captioning on their programming

- The industry migrated to digital, file-based workflows, then to automated ones, and now to cloud-based workflows

Another key moment in captioning history was the 21st Century Communications and Video Accessibility Act (CVAA) of 2010. Under this law, programming that aired on TV with captions must also carry captions when it is subsequently delivered over the internet, including to mobile platforms.

ENCO Systems has played a strong, proactive role in advancing and refining the automated conversion of speech to text over the years. Today, its enCaption systems automate the captioning of both live and non-live media with 99% accuracy and in near real time. Want to know more about the state of the art in captioning? Check out our many resources at enco.com.